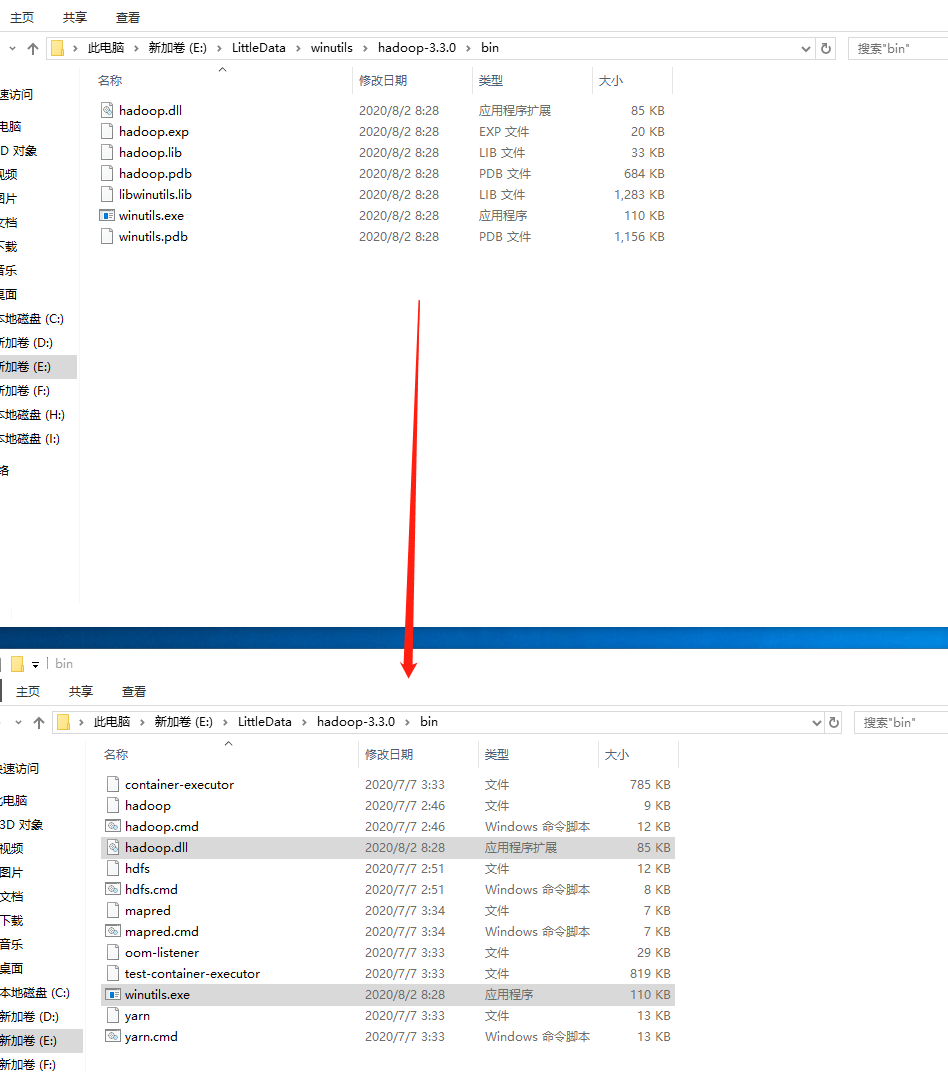

Thanks a lot, just what I was looking for. – user373201, Feb 27 '17 at 2:59

Now add `tProperty("", "PATH/TO/THE/DIR")` in your code. – edited Aug 21 '17 at 7:09, Kenny John Jacob

Also, if you have a cmd line open, restart it for the variables to take effect. – eych, Aug 21 '19 at 16:51

Create a bin folder in any directory (to be used in step 3).

Download winutils.exe and place it in the bin directory.
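The steps above (a `bin` folder, `winutils.exe` inside it, then a property set in code) can be sketched roughly as follows. This is a minimal sketch, not the answerer's code: it assumes the truncated call is `System.setProperty` with the key `hadoop.home.dir`, and the object and method names (`WinutilsSetup`, `configureHadoopHome`) are hypothetical. It only sets the property when `bin/winutils.exe` is actually present under the given folder.

```scala
import java.nio.file.{Files, Path}

// Hypothetical helper, not part of Spark or Hadoop.
object WinutilsSetup {
  // Sets hadoop.home.dir to `home` only if <home>/bin/winutils.exe exists.
  // Assumption: "hadoop.home.dir" is the property key meant by the truncated call.
  // Returns true when the property was set, false otherwise.
  def configureHadoopHome(home: Path): Boolean = {
    val winutils = home.resolve("bin").resolve("winutils.exe")
    if (Files.isRegularFile(winutils)) {
      System.setProperty("hadoop.home.dir", home.toAbsolutePath.toString)
      true
    } else {
      false
    }
  }
}
```

A call such as `WinutilsSetup.configureHadoopHome(Paths.get("PATH/TO/THE/DIR"))` would need to run before the `SparkContext` is created, and the path passed in is the folder that contains `bin`, not `bin` itself.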

# Download winutils.exe

I have to set HADOOP_HOME to the hadoop folder instead of the bin folder. – Stanley, Aug 29 '16 at 7:44

Also, be sure to download the correct winutils.exe based on the version of Hadoop that Spark is compiled for (so, not necessarily the link above).

Here is a good explanation of your problem with the solution. Set up your HADOOP_HOME environment variable on the OS level or programmatically:

    tProperty("", "full path to the folder with winutils")

The code from the question:

    val conf = new SparkConf().setAppName("DemoDF").setMaster("local")

Error:

    16/02/26 18:29:33 INFO SparkContext: Created broadcast 0 from textFile at FrameDemo.scala:13
    16/02/26 18:29:34 ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
        at .Shell.getQualifiedBinPath(Shell.java:278)
        at .Shell.getWinUtilsPath(Shell.java:300)
        at .Shell.(Shell.java:293)
        at .StringUtils.(StringUtils.java:76)
        at .tInputPaths(FileInputFormat.java:362)
        at $$anonfun$hadoopFile$1$$anonfun$33.apply(SparkContext.scala:1015)
        at .HadoopRDD$$anonfun$getJobConf$6.apply(HadoopRDD.scala:176)
        at .HadoopRDD.getJobConf(HadoopRDD.scala:176)
        at .HadoopRDD.getPartitions(HadoopRDD.scala:195)
        at .RDD$$anonfun$partitions$2.apply(RDD.scala:239)
        at .RDD$$anonfun$partitions$2.apply(RDD.scala:237)
        at (Option.scala:120)
        at .RDD.partitions(RDD.scala:237)
        at .MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
        at .runJob(SparkContext.scala:1929)
        at .RDD.count(RDD.scala:1143)
        at $.main(FrameDemo.scala:14)
        at .main(FrameDemo.scala)
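The `null\bin\winutils.exe` in the error message and the comment about pointing HADOOP_HOME at the hadoop folder rather than `bin` both follow from how the path is assembled. The sketch below is an illustration only, not Hadoop's source: it assumes the home directory is looked up from the `hadoop.home.dir` system property first and the `HADOOP_HOME` environment variable second, and that `\bin\winutils.exe` is appended to whatever was found. The names `WinutilsPathCheck`, `resolveHome`, and `expectedWinutils` are hypothetical.

```scala
// Illustration only: mimics the lookup described above, not Hadoop's actual code.
object WinutilsPathCheck {
  // Assumed lookup order: system property first, then environment variable.
  def resolveHome(props: Map[String, String], env: Map[String, String]): Option[String] =
    props.get("hadoop.home.dir").orElse(env.get("HADOOP_HOME"))

  // Builds the path where winutils.exe would be expected.
  // A missing home is rendered as the text "null", as in the error message.
  def expectedWinutils(home: Option[String]): String =
    home.getOrElse("null") + "\\bin\\winutils.exe"
}
```

With neither value set, `expectedWinutils(None)` gives `null\bin\winutils.exe`, matching the exception text. Passing a home that already ends in `bin`, such as `Some("C:\\hadoop\\bin")`, gives `C:\hadoop\bin\bin\winutils.exe`, which is why the variable should name the parent folder.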

# Download winutils.exe on Windows 7

I'm not able to run a simple Spark job in Scala IDE (Maven Spark project) installed on Windows 7.

Tags: locate bin executable scala hadoop winutils apache org spark
